Court Rules AI Breaks Equality Laws

| Published date | 25 November 2020 |

| Subject Matter | Privacy, Data Protection, Privacy Protection |

| Law Firm | Hill Dickinson |

| Author | David Hill, Eleanor Tunnicliffe and Richard Parker |

The Court of Appeal decided that South Wales Police had not done enough to make sure that the technology it was using was not biased in this way. The Court said:

'The fact remains, however, that SWP has never sought to satisfy themselves, either directly or by way of independent verification, that the software program in this case does not have an unacceptable bias on grounds of race or sex. There is evidence, in particular from Dr Jain, that programs for AFR can sometimes have such a bias.'

Addressing the interference in people's privacy, the Court also said that the fact that the new technology was only being trialled was no defence to the requirement to act in accordance with the law.

So, developers and purchasers of AI in the public sector will need to find ways of demonstrating that they have investigated the question of whether the technology may be biased against different groups of people, and ensure that measures are put in place to mitigate against the risk of bias or discrimination. It is important to understand that the Court of Appeal does not go so far to say that the technology must be completely free from bias. Rather, the organisations using it must have investigated whether there is any bias and taken this into account when deciding whether to adopt the technology. Given that the relevant data protection, privacy and equalities duties are...

To continue reading

Request your trialSubscribers can access the reported version of this case.

You can sign up for a trial and make the most of our service including these benefits.

Why Sign-up to vLex?

-

Over 100 Countries

Search over 120 million documents from over 100 countries including primary and secondary collections of legislation, case law, regulations, practical law, news, forms and contracts, books, journals, and more.

-

Thousands of Data Sources

Updated daily, vLex brings together legal information from over 750 publishing partners, providing access to over 2,500 legal and news sources from the world’s leading publishers.

-

Find What You Need, Quickly

Advanced A.I. technology developed exclusively by vLex editorially enriches legal information to make it accessible, with instant translation into 14 languages for enhanced discoverability and comparative research.

-

Over 2 million registered users

Founded over 20 years ago, vLex provides a first-class and comprehensive service for lawyers, law firms, government departments, and law schools around the world.

Subscribers are able to see a list of all the cited cases and legislation of a document.

You can sign up for a trial and make the most of our service including these benefits.

Why Sign-up to vLex?

-

Over 100 Countries

Search over 120 million documents from over 100 countries including primary and secondary collections of legislation, case law, regulations, practical law, news, forms and contracts, books, journals, and more.

-

Thousands of Data Sources

Updated daily, vLex brings together legal information from over 750 publishing partners, providing access to over 2,500 legal and news sources from the world’s leading publishers.

-

Find What You Need, Quickly

Advanced A.I. technology developed exclusively by vLex editorially enriches legal information to make it accessible, with instant translation into 14 languages for enhanced discoverability and comparative research.

-

Over 2 million registered users

Founded over 20 years ago, vLex provides a first-class and comprehensive service for lawyers, law firms, government departments, and law schools around the world.

Subscribers are able to see a list of all the documents that have cited the case.

You can sign up for a trial and make the most of our service including these benefits.

Why Sign-up to vLex?

-

Over 100 Countries

Search over 120 million documents from over 100 countries including primary and secondary collections of legislation, case law, regulations, practical law, news, forms and contracts, books, journals, and more.

-

Thousands of Data Sources

Updated daily, vLex brings together legal information from over 750 publishing partners, providing access to over 2,500 legal and news sources from the world’s leading publishers.

-

Find What You Need, Quickly

Advanced A.I. technology developed exclusively by vLex editorially enriches legal information to make it accessible, with instant translation into 14 languages for enhanced discoverability and comparative research.

-

Over 2 million registered users

Founded over 20 years ago, vLex provides a first-class and comprehensive service for lawyers, law firms, government departments, and law schools around the world.

Subscribers are able to see the revised versions of legislation with amendments.

You can sign up for a trial and make the most of our service including these benefits.

Why Sign-up to vLex?

-

Over 100 Countries

Search over 120 million documents from over 100 countries including primary and secondary collections of legislation, case law, regulations, practical law, news, forms and contracts, books, journals, and more.

-

Thousands of Data Sources

Updated daily, vLex brings together legal information from over 750 publishing partners, providing access to over 2,500 legal and news sources from the world’s leading publishers.

-

Find What You Need, Quickly

Advanced A.I. technology developed exclusively by vLex editorially enriches legal information to make it accessible, with instant translation into 14 languages for enhanced discoverability and comparative research.

-

Over 2 million registered users

Founded over 20 years ago, vLex provides a first-class and comprehensive service for lawyers, law firms, government departments, and law schools around the world.

Subscribers are able to see any amendments made to the case.

You can sign up for a trial and make the most of our service including these benefits.

Why Sign-up to vLex?

-

Over 100 Countries

Search over 120 million documents from over 100 countries including primary and secondary collections of legislation, case law, regulations, practical law, news, forms and contracts, books, journals, and more.

-

Thousands of Data Sources

Updated daily, vLex brings together legal information from over 750 publishing partners, providing access to over 2,500 legal and news sources from the world’s leading publishers.

-

Find What You Need, Quickly

Advanced A.I. technology developed exclusively by vLex editorially enriches legal information to make it accessible, with instant translation into 14 languages for enhanced discoverability and comparative research.

-

Over 2 million registered users

Founded over 20 years ago, vLex provides a first-class and comprehensive service for lawyers, law firms, government departments, and law schools around the world.

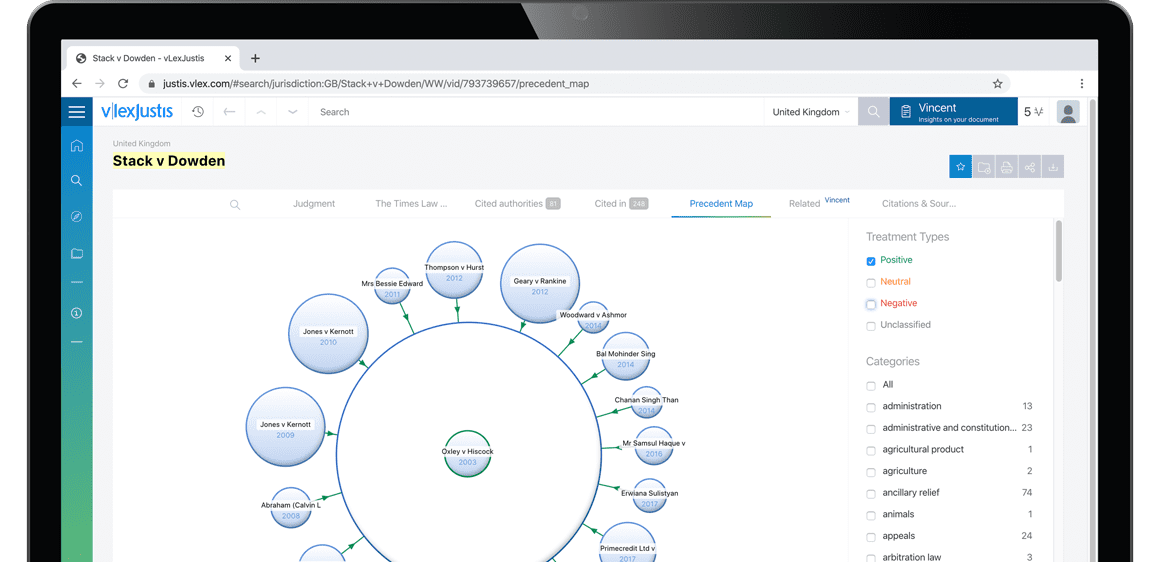

Subscribers are able to see a visualisation of a case and its relationships to other cases. An alternative to lists of cases, the Precedent Map makes it easier to establish which ones may be of most relevance to your research and prioritise further reading. You also get a useful overview of how the case was received.

Why Sign-up to vLex?

-

Over 100 Countries

Search over 120 million documents from over 100 countries including primary and secondary collections of legislation, case law, regulations, practical law, news, forms and contracts, books, journals, and more.

-

Thousands of Data Sources

Updated daily, vLex brings together legal information from over 750 publishing partners, providing access to over 2,500 legal and news sources from the world’s leading publishers.

-

Find What You Need, Quickly

Advanced A.I. technology developed exclusively by vLex editorially enriches legal information to make it accessible, with instant translation into 14 languages for enhanced discoverability and comparative research.

-

Over 2 million registered users

Founded over 20 years ago, vLex provides a first-class and comprehensive service for lawyers, law firms, government departments, and law schools around the world.

Subscribers are able to see the list of results connected to your document through the topics and citations Vincent found.

You can sign up for a trial and make the most of our service including these benefits.

Why Sign-up to vLex?

-

Over 100 Countries

Search over 120 million documents from over 100 countries including primary and secondary collections of legislation, case law, regulations, practical law, news, forms and contracts, books, journals, and more.

-

Thousands of Data Sources

Updated daily, vLex brings together legal information from over 750 publishing partners, providing access to over 2,500 legal and news sources from the world’s leading publishers.

-

Find What You Need, Quickly

Advanced A.I. technology developed exclusively by vLex editorially enriches legal information to make it accessible, with instant translation into 14 languages for enhanced discoverability and comparative research.

-

Over 2 million registered users

Founded over 20 years ago, vLex provides a first-class and comprehensive service for lawyers, law firms, government departments, and law schools around the world.