Trends In Information Technology Law: Looking Ahead To 2016

This piece looks ahead to the IT law developments we might expect in 2016.

More than in most years, looking ahead to what's in store for IT lawyers next year is all about the big picture: how IT developments will impact businesses generally, and how this will affect what comes across IT lawyers' desks. Two key words for next year are 'scale' and 'disruption'. IT developments are now at sufficient scale that even year-on-year increases have an increasingly large impact; and these changes, characterised as 'digital transformation' by research consultancy Gartner, will start to disrupt established business patterns more significantly. What's behind these changes is the rapid market adoption and convergence of artificial intelligence (AI) and the cloud, two separate but related areas of technology development that are transforming IT, each with data at its heart.

Artificial Intelligence

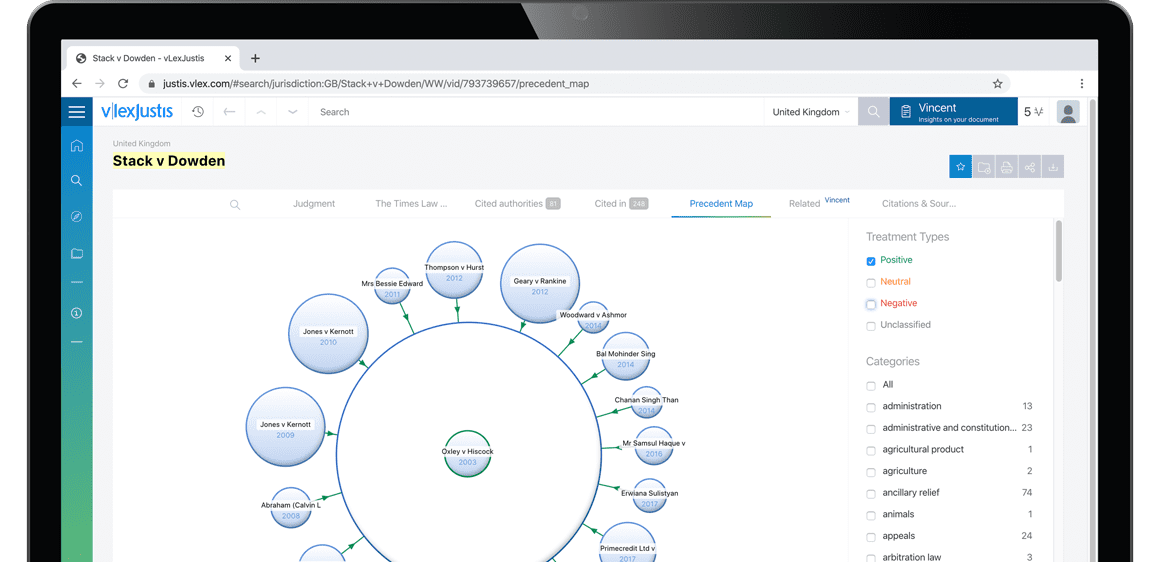

AI, as the convergence of four separate, rapidly advancing machine technologies - processing, learning, perception and control (see Figure 1) - will move to the mainstream in 2016.

Machine processing is fuelled by Moore's law, the fifty-year-old empirical rule that computer processor speeds double every 18 to 24 months. The basic building block of IT, Moore's law still has some oomph left but is starting to run out of steam as ever higher microprocessor density on the chip produces excess heat and adverse side effects: Intel announced in mid-2015 that its 10nm (nanometre) Cannonlake processor would be delayed until 2017.
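To give a feel for what a doubling every 18 to 24 months means in practice, the arithmetic can be sketched in a few lines of Python. This is an illustrative calculation only: the function names and the ten-year horizon are assumptions chosen for the example, not figures from Intel or Gartner.

```python
def doublings(years, months_per_doubling):
    """Number of doublings that fit into the given number of years."""
    return years * 12 / months_per_doubling


def growth_factor(years, months_per_doubling):
    """Overall multiplier after repeated doubling (2 raised to the number of doublings)."""
    return 2 ** doublings(years, months_per_doubling)


# Compare the two ends of the 18 to 24 month range over an assumed ten-year horizon.
for period in (18, 24):
    factor = growth_factor(10, period)
    print(f"Doubling every {period} months for 10 years: roughly x{factor:.0f}")
```

At the slower end of the range, ten years gives five doublings, a 32-fold increase; at the faster end the same decade gives about a 100-fold increase. That gap is why even a modest slowdown in the doubling period matters at scale.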

Machine learning is the process by which computers teach themselves to carry out pattern recognition tasks without being explicitly programmed. They do this by analysing and processing very large datasets using algorithms called neural networks, so named because they mimic the human brain. For example, a computer may teach itself to recognise a human face by breaking down input information into layers and passing data from layer to increasingly abstract layer until the final output layer can categorise the image as a particular face. What is novel is that the size of the datasets means that the computer itself can generate the rules by which data passes between layers. Voice recognition is another area where machine learning is developing quickly: the error rate has now been reduced to below 10%, and companies like Apple, Google and Microsoft are investing heavily in their Siri, Google Now and Cortana virtual assistants - a growth area for 2016.
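The layer-to-layer idea described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not a working face recogniser: the four-value "image", the layer sizes and every weight below are made up for the example, whereas a real system would learn its weights from a very large dataset.

```python
import math


def sigmoid(x):
    """Squash any number into the range 0 to 1."""
    return 1.0 / (1.0 + math.exp(-x))


def layer(inputs, weights, biases):
    """One layer: each output is a weighted sum of all inputs, then squashed."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(pixels, layers):
    """Pass the data through each layer in turn, as the article describes."""
    activations = pixels
    for weights, biases in layers:
        activations = layer(activations, weights, biases)
    return activations


# Hypothetical weights (assumed for illustration; in practice these are learned).
LAYERS = [
    # Hidden layer: 4 inputs -> 3 intermediate features
    ([[0.5, -0.2, 0.1, 0.4],
      [-0.3, 0.8, 0.2, -0.1],
      [0.1, 0.1, -0.5, 0.6]], [0.0, 0.1, -0.1]),
    # Output layer: 3 features -> 2 scores ("face", "not a face")
    ([[0.7, -0.4, 0.2],
      [-0.6, 0.5, 0.3]], [0.05, -0.05]),
]

scores = forward([0.0, 0.5, 1.0, 0.25], LAYERS)
label = "face" if scores[0] > scores[1] else "not a face"
print(scores, label)
```

The point of the sketch is the structure: the rules that carry data between layers are just numbers, and in machine learning it is the training data, rather than a programmer, that sets them.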

Machine learning techniques combine with increasingly powerful and cheap cameras and sensors to accelerate machine perception - the ability of processors to analyse any digital data to accurately recognise and describe people, objects and actions. With internet connected sensors set to increase from 5bn today to between 20bn and 30bn by 2020, machine...
